Does technology work for people, or the other way around? We are creative, collaborative, inventive beings, but we're also social, easily influenced, and normative. What we've imagined ourselves "creating" has often been more of a prison we've built around ourselves.

Consider Yuval Noah Harari’s provocative idea that wheat domesticated people rather than the other way around.

> Think for a moment about the Agricultural Revolution from the viewpoint of wheat. Ten thousand years ago wheat was just a wild grass, one of many, confined to a small range in the Middle East. Suddenly, within just a few short millennia, it was growing all over the world. According to the basic evolutionary criteria of survival and reproduction, wheat has become one of the most successful plants in the history of the earth.
>
> In areas such as the Great Plains of North America, where not a single wheat stalk grew 10,000 years ago, you can today walk for hundreds upon hundreds of kilometers without encountering any other plant. Worldwide, wheat covers about 2.25 million square kilometers of the globe's surface, almost ten times the size of Britain. How did this grass turn from insignificant to ubiquitous?
>
> Wheat did it by manipulating Homo sapiens to its advantage. This ape had been living a fairly comfortable life hunting and gathering until about 10,000 years ago, but then began to invest more and more effort in cultivating wheat. Within a couple of millennia, humans in many parts of the world were doing little from dawn to dusk other than taking care of wheat plants. It wasn't easy. Wheat demanded a lot of them. Wheat didn't like rocks and pebbles, so Sapiens broke their backs clearing fields. Wheat didn't like sharing its space, water, and nutrients with other plants, so men and women labored long days weeding under the scorching sun. Wheat got sick, so Sapiens had to keep a watch out for worms and blight. Wheat was defenseless against other organisms that liked to eat it, from rabbits to locust swarms, so the farmers had to guard and protect it. Wheat was thirsty, so humans lugged water from springs and streams to water it. Its hunger even impelled Sapiens to collect animal feces to nourish the ground in which wheat grew.
>
> The body of Homo sapiens had not evolved for such tasks. It was adapted to climbing apple trees and running after gazelles, not to clearing rocks and carrying water buckets. Human spines, knees, necks, and arches paid the price. Studies of ancient skeletons indicate that the transition to agriculture brought about a plethora of ailments, such as slipped disks, arthritis, and hernias. Moreover, the new agricultural tasks demanded so much time that people were forced to settle permanently next to their wheat fields. This completely changed their way of life. We did not domesticate wheat. It domesticated us. The word "domesticate" comes from the Latin domus, which means "house." Who's the one living in a house? Not the wheat. It's the Sapiens.
>
> Yuval Noah Harari, *Sapiens: A Brief History of Humankind*

When I consider what I spend my own time on, quite a bit of it feels like me working for my technology. I receive hundreds of emails a day (somehow that's my fault). I never open most of them, but they still demand a lot of time: sifting through them, managing them, responding, adding things to my calendar, and, in the saddest part of the manipulation, checking to see if there's more. And even with all that dedication to its maintenance, every so often I miss an email that matters to me and fail to respond to someone or to take an action in my own interest.

I have ADD, so email is not all I forget. Even if I weren't enticed by the badges and notifications on my phone (most of which are turned off; I can't imagine what it would be like if they were all allowed), I forget what I went upstairs to get on a pretty regular basis. I will literally say out loud, "Don't forget to x," and an hour or a day later be like, "How did I forget that thing?" To some extent, this is a product of loving to do a lot of things and having many things to remember. Some of it is my biology.

But when I have tried to use technology to solve this problem, it inevitably fails. Part of the reason, I think, is that apps too explicitly produce the feeling of having a new boss in the form of a checklist, chart, or calendar.

List interfaces inevitably produce more to-dos than can fit into my waking hours. And what Steven Pressfield has so eloquently described across several books as the Resistance seems to feed on the very notion of a time I've set for myself to do things that only I am accountable for, which is something like 80% of creative work. Suddenly I find myself in "just one more little thing and then I'll do the thing I scheduled" mode, or, you know, reading Slack messages (Slack being another prime example of a technology I'm working for without compensation).

So here's the question. It seems plausible that in the near future I'll be able to assign AI to sort through my email, keep me abreast of information I'm interested in, help me avoid missing events on my calendar, and do all the work for the other technology I'm currently volunteering for. But I have a sneaking suspicion that this line of thinking may lead to something even worse.

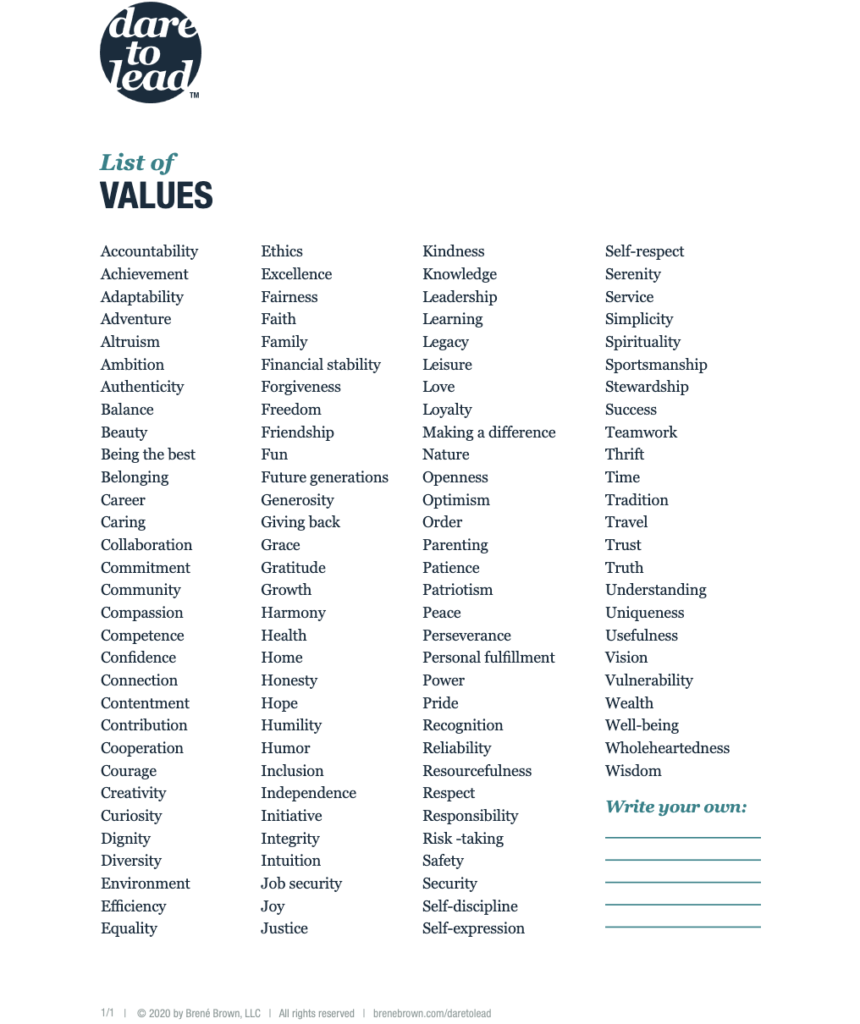

When I am in goal-setting conversations (BASB, communities, coaching, "productivity porn"), what I notice is how much of what people aspire to feels kind of… unconsidered. Why is this thing important to you? And of course, we live in a culture where people like me, who actually can choose what we want to do, have that privilege because of the mostly non-self-determined work of other people.

I suspect we all mostly want to feel loved, like we matter, and like what we’re doing with our time has utility or even service to others. Deep down, under the stories about having stuff, or being fit, or being enlightened, or whatever your flavour of goal looks like, this is what humans crave when their physical needs are met and they have done the work of healing from trauma.

Will technology make it easier to be in that state or harder? So far, every new “age” seems to be filled with innovation that largely takes us out of that state. So being hopeful about AI does feel a little naïve.

But perhaps what's going on isn't a problem with technology itself but with the violence of the systems that feed it, and that it in turn reinforces. What would it look like for technology to emerge from people and communities who have done the work to heal and to recognise the deep trauma of living within "imperialist white supremacist heteropatriarchy"?

Even in the more "progressive" tech-maker spaces I'm part of, advantaged people (men, white people, Westerners, the elite-college-educated) predominate. I'm sure there are spaces I'm not in where technologists have different demographics (please invite me if I'm welcome!), but looking at the leadership in global tech and the funding numbers, it's hard to imagine that AI's current developers aren't still operating with some pretty gigantic blind spots.

Still, I find myself pretty seduced by the idea of externalizing my executive function to the point where I can remember things without having to remember to remember them. But what happens when I outsource the process of deciding what exactly is worth remembering? In the end, the answer is probably to "embrace forgetting" and to appreciate where I am and what's around me, without the endless overlord of achievement made manifest in code.